DaemonSet

Guarantees that one copy of a given Pod runs on every node in the cluster.

Use it when the same workload (e.g., a log collector or a monitoring agent) has to run on each node.

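Key features of a DaemonSet

- Runs on every node:
  - A DaemonSet runs one copy of the same Pod on each schedulable node in the cluster. Nodes whose taints the Pod does not tolerate (for example, a kubeadm control-plane node) are skipped.
  - When a new node joins the cluster, the DaemonSet automatically creates a Pod on it.
- Can be limited to specific nodes:
  - Node labels combined with a node selector restrict the Pods to matching nodes only.
- Removes Pods from nodes:
  - When a node is removed from the cluster, or a change to the DaemonSet means the node no longer matches, the Pod on that node is removed automatically.
- Automatic management:
  - The DaemonSet controller reacts to nodes being added or removed and creates or deletes Pods accordingly, with no manual intervention.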
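The node-restriction feature listed above can be sketched as a manifest. This is a minimal illustration under stated assumptions, not part of the original walkthrough: the DaemonSet name `ssd-monitor`, the Pod label `app: ssd-monitor`, and the node label `disktype: ssd` are all hypothetical.

```yaml
# Sketch: a DaemonSet limited to nodes that carry a given label.
# All names and the disktype=ssd label are illustrative assumptions.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ssd-monitor
spec:
  selector:
    matchLabels:
      app: ssd-monitor
  template:
    metadata:
      labels:
        app: ssd-monitor
    spec:
      # Pods are created only on nodes labeled disktype=ssd.
      nodeSelector:
        disktype: ssd
      containers:
      - name: main
        image: nginx:1.14
```

A node would opt in with `kubectl label node <node-name> disktype=ssd`. If the label is later removed, the node no longer matches and the DaemonSet deletes its Pod from that node.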

DaemonSet use cases

- Log collection:
  - Collect logs on each node and forward them to a centralized logging system.
  - Examples: Fluentd, Logstash.
- Monitoring agents:
  - Run an agent that monitors node and container status.
  - Examples: Prometheus Node Exporter, Datadog Agent.
- Networking:
  - Run Pods that handle network configuration or routing.
  - Examples: CNI plugins, DNS resolvers.
- Per-node tasks:
  - Perform a task that must run on every node.
  - Example: a security scanning agent.
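As a rough illustration of the log-collection use case, a per-node agent usually needs access to the node's own log directory. The sketch below assumes the logs live under `/var/log` on each node and mounts that directory through a `hostPath` volume. The names `fluentd-logging` and `varlog` are hypothetical, and the agent's own configuration (where it forwards the logs) is deliberately left out.

```yaml
# Sketch: a per-node log collector that reads the node's /var/log.
# The metadata names are illustrative assumptions.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-logging
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: fluentd-logging
  template:
    metadata:
      labels:
        app: fluentd-logging
    spec:
      containers:
      - name: fluentd
        image: registry.k8s.io/fluentd-elasticsearch:1.20
        volumeMounts:
        # Mount the node's log directory into the container.
        - name: varlog
          mountPath: /var/log
      volumes:
      # hostPath exposes a directory from the node's own filesystem.
      - name: varlog
        hostPath:
          path: /var/log
```

Because a DaemonSet places one Pod on each node, every Pod sees only the logs of the node it runs on, which is the behavior a node-level collector needs.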

List all deployed DaemonSets

[master ~]$ kubectl get ds -A

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-flannel kube-flannel-ds 1 1 1 1 1 <none> 3m33s

kube-system kube-proxy 1 1 1 1 1 kubernetes.io/os=linux 3m34s

[master ~]$ kubectl describe daemonsets.apps kube-flannel-ds -n kube-flannel

Name: kube-flannel-ds

Selector: app=flannel,k8s-app=flannel

Node-Selector: <none>

Labels: app=flannel

k8s-app=flannel

tier=node

Annotations: deprecated.daemonset.template.generation: 1

Desired Number of Nodes Scheduled: 1

Current Number of Nodes Scheduled: 1

Number of Nodes Scheduled with Up-to-date Pods: 1

Number of Nodes Scheduled with Available Pods: 1

Number of Nodes Misscheduled: 0

Pods Status: 1 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=flannel

k8s-app=flannel

tier=node

Service Account: flannel

Init Containers:

install-cni-plugin:

Image: docker.io/flannel/flannel-cni-plugin:v1.2.0

Port: <none>

Host Port: <none>

Command:

cp

Args:

-f

/flannel

/opt/cni/bin/flannel

Environment: <none>

Mounts:

/opt/cni/bin from cni-plugin (rw)

install-cni:

Image: docker.io/flannel/flannel:v0.23.0

[master ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 3h27m v1.27.2

node2 Ready <none> 132m v1.27.2

node3 Ready <none> 42m v1.27.2

node4 Ready <none> 42m v1.27.2

# Delete a worker node

[master ~]$ kubectl delete nodes node4

node "node4" deleted

[master ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 3h35m v1.27.2

node2 Ready <none> 140m v1.27.2

node3 Ready <none> 50m v1.27.2

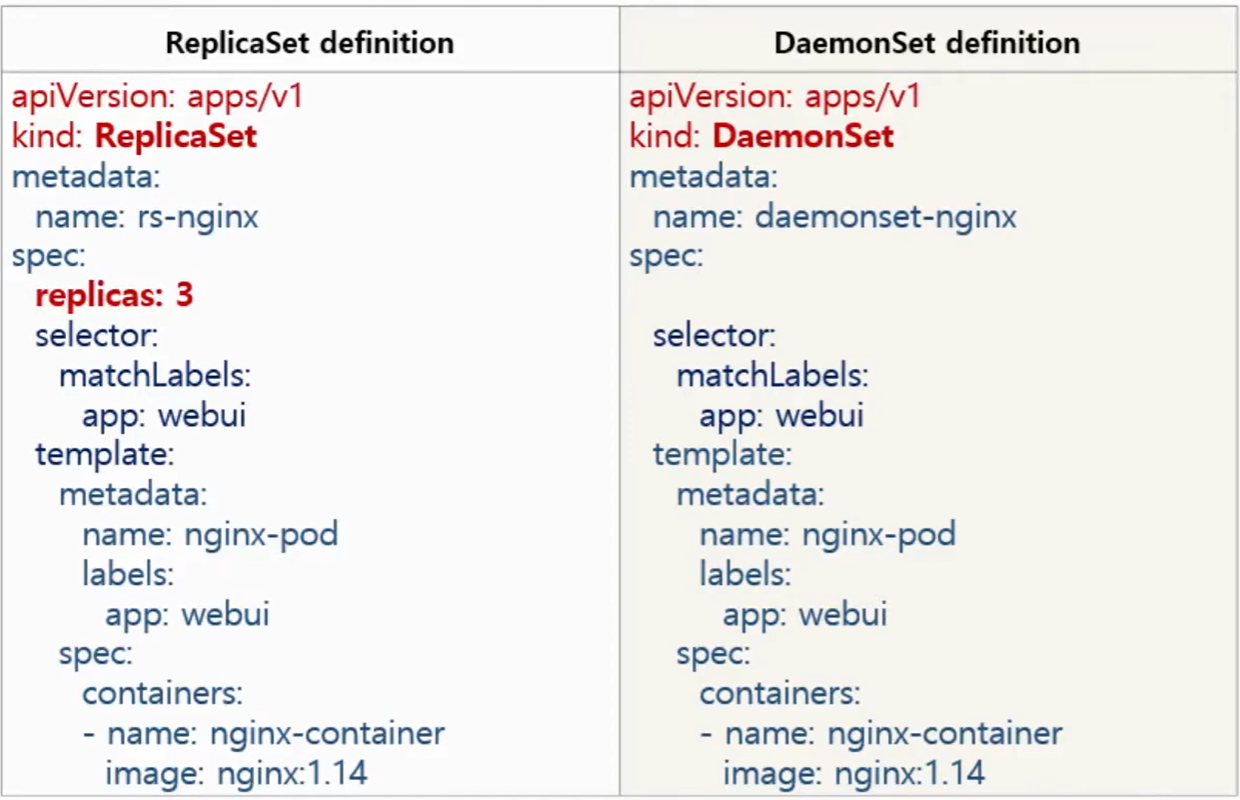

[master ~]$ cat > daemonset-exam.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset-nginx
spec:
  selector:
    matchLabels:
      app: webui
  template:
    metadata:
      name: nginx-pod
      labels:
        app: webui
    spec:
      containers:
      - name: nginx-container
        image: nginx:1.14

[master ~]$ kubectl create -f daemonset-exam.yaml

daemonset.apps/daemonset-nginx created

[master ~]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-nginx-ppgll 1/1 Running 0 12s 10.5.2.5 node3 <none> <none>

daemonset-nginx-wntfs 1/1 Running 0 12s 10.5.1.5 node2 <none> <none>

# Re-add the worker node

# Create a token

# kubeadm token create --ttl [duration]

[master ~]$ kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

c42htv.p6ltpxh8m2tjwghu 20h 2024-02-27T02:56:44Z authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

[master ~]$ kubeadm token create --ttl 1h

vkytah.x0y8bgq16ihf8v5z

[master ~]$ kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

c42htv.p6ltpxh8m2tjwghu 20h 2024-02-27T02:56:44Z authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

vkytah.x0y8bgq16ihf8v5z 59m 2024-02-26T07:36:18Z authentication,signing <none> system:bootstrappers:kubeadm:default-node-token

# Run kubeadm reset on node4

[node4 ~]$ kubeadm reset

W0226 06:37:47.439686 26715 preflight.go:56] [reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

...

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[node4 ~]$ kubeadm join 192.168.0.18:6443 --token vkytah.x0y8bgq16ihf8v5z --discovery-token-ca-cert-hash sha256:7099406672b8b15a79d1286859b7a1c29a3797afa8d1a81a8d6e0dbd182a0396

W0226 06:40:54.273866 582 initconfiguration.go:120] Usage of CRI endpoints without URL scheme is deprecated and can cause kubelet errors in the future. Automatically prepending scheme "unix" to the "criSocket" with value "/run/docker/containerd/containerd.sock". Please update your configuration!

# Confirm that node4 has rejoined the cluster

[master ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 3h44m v1.27.2

node2 Ready <none> 150m v1.27.2

node3 Ready <none> 60m v1.27.2

node4 Ready <none> 41s v1.27.2

# Important: one Pod per node is guaranteed. The rejoined node4 received its Pod automatically.

[master ~]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-nginx-7frwz 1/1 Running 0 41s 10.5.4.3 node4 <none> <none>

daemonset-nginx-ppgll 1/1 Running 0 8m28s 10.5.2.5 node3 <none> <none>

daemonset-nginx-wntfs 1/1 Running 0 8m28s 10.5.1.5 node2 <none> <none>

[master ~]$ kubectl delete pod daemonset-nginx-7frwz

pod "daemonset-nginx-7frwz" deleted

[master ~]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-nginx-2fnhc 1/1 Running 0 3s 10.5.4.4 node4 <none> <none>

daemonset-nginx-ppgll 1/1 Running 0 10m 10.5.2.5 node3 <none> <none>

daemonset-nginx-wntfs 1/1 Running 0 10m 10.5.1.5 node2 <none> <none>

# In short, this controller is a good fit for logging and monitoring agents.

[master ~]$ kubectl get daemonsets.apps

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset-nginx 3 3 3 3 3 <none> 12m

# A DaemonSet also supports rolling updates

[master ~]$ kubectl edit daemonsets.apps daemonset-nginx

daemonset.apps/daemonset-nginx edited

## In the editor, the container image was changed from nginx:1.14 to nginx:1.15

[master ~]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-nginx-5f8hh 1/1 Running 0 4s 10.5.1.6 node2 <none> <none>

daemonset-nginx-6q4rq 1/1 Running 0 6s 10.5.2.6 node3 <none> <none>

daemonset-nginx-t7kwx 0/1 ContainerCreating 0 2s <none> node4 <none> <none>

[master ~]$ kubectl get daemonsets.apps

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset-nginx 3 3 2 3 2 <none> 13m

[master ~]$ kubectl get daemonsets.apps

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset-nginx 3 3 3 3 3 <none> 13m

[master ~]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-nginx-5f8hh 1/1 Running 0 16s 10.5.1.6 node2 <none> <none>

daemonset-nginx-6q4rq 1/1 Running 0 18s 10.5.2.6 node3 <none> <none>

daemonset-nginx-t7kwx 1/1 Running 0 14s 10.5.4.5 node4 <none> <none>

[master ~]$ kubectl describe pod daemonset-nginx-5f8hh

Name: daemonset-nginx-5f8hh

Namespace: default

Priority: 0

Service Account: default

Node: node2/192.168.0.17

Start Time: Mon, 26 Feb 2024 06:46:39 +0000

Labels: app=webui

controller-revision-hash=6459b7498d

pod-template-generation=2

Annotations: <none>

Status: Running

IP: 10.5.1.6

IPs:

IP: 10.5.1.6

Controlled By: DaemonSet/daemonset-nginx

Containers:

nginx-container:

Container ID: containerd://fed8be33d34d2ebf29596f36a08f9dadfe079702a1eb3406afd6f06bb3c532ad

Image: nginx:1.15

Image ID: docker.io/library/nginx@sha256:23b4dcdf0d34d4a129755fc6f52e1c6e23bb34ea011b315d87e193033bcd1b68

Port: <none>

Host Port: <none>

State: Running

# Rollback is also supported

[master ~]$ kubectl rollout undo daemonset daemonset-nginx

daemonset.apps/daemonset-nginx rolled back

[master ~]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-nginx-5f8hh 1/1 Running 0 2m50s 10.5.1.6 node2 <none> <none>

daemonset-nginx-6q4rq 1/1 Terminating 0 2m52s 10.5.2.6 node3 <none> <none>

daemonset-nginx-mrdnl 1/1 Running 0 2s 10.5.4.6 node4 <none> <none>

[master ~]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-nginx-gnfgc 1/1 Running 0 4s 10.5.1.7 node2 <none> <none>

daemonset-nginx-lszzs 1/1 Running 0 6s 10.5.2.7 node3 <none> <none>

daemonset-nginx-mrdnl 1/1 Running 0 8s 10.5.4.6 node4 <none> <none>

# Exercise: deploy a DaemonSet for Fluentd logging

# Generate the manifest from a Deployment template

$ kubectl create deployment elasticsearch -n kube-system --image=registry.k8s.io/fluentd-elasticsearch:1.20 --dry-run=client -o yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: elasticsearch
  name: elasticsearch
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: elasticsearch
    spec:
      containers:
      - image: registry.k8s.io/fluentd-elasticsearch:1.20
        name: fluentd-elasticsearch
        resources: {}
status: {}

$ kubectl create deployment elasticsearch -n kube-system --image=registry.k8s.io/fluentd-elasticsearch:1.20 --dry-run=client -o yaml > fluentd.yaml

# Adapt it for a DaemonSet (change kind to DaemonSet; remove replicas, strategy, and status)

$ vi fluentd.yaml

$ cat fluentd.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
      - image: registry.k8s.io/fluentd-elasticsearch:1.20
        name: fluentd-elasticsearch

$ kubectl create -f fluentd.yaml

daemonset.apps/elasticsearch created

$ kubectl get ds -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

elasticsearch 1 1 1 1 1 <none> 116s

kube-proxy 1 1 1 1 1 kubernetes.io/os=linux 48m

Rolling Update

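A DaemonSet supports the RollingUpdate strategy, which replaces existing Pods in sequence whenever the Pod template changes.

updateStrategy:
  type: RollingUpdate
  rollingUpdate:
    maxUnavailable: 1

# maxUnavailable: the maximum number of Pods that may be unavailable at the same time during the update (default: 1).

# With a value of 1, old Pods are replaced one at a time, each followed by a new Pod.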
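To show where the strategy fragment sits, here is a sketch of a complete manifest that reuses the `daemonset-nginx` example from this post. The `minReadySeconds` value of 5 is an assumed setting added for illustration and does not appear in the original walkthrough.

```yaml
# Sketch: updateStrategy in the context of a full DaemonSet spec.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset-nginx
spec:
  # Assumed value: a new Pod must stay ready this long before it counts as available.
  minReadySeconds: 5
  updateStrategy:
    # RollingUpdate is the default type. The other type, OnDelete,
    # replaces a Pod only after it has been deleted manually.
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      app: webui
  template:
    metadata:
      labels:
        app: webui
    spec:
      containers:
      - name: nginx-container
        image: nginx:1.15
```

`updateStrategy` belongs directly under `spec`, next to `selector` and `template`, not inside the Pod template. Changing anything under `template`, such as the image tag, is what triggers the rollout.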

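Differences between DaemonSet and Deployment

| | DaemonSet | Deployment |
|---|---|---|
| Main purpose | Guarantees one Pod on each node | Guarantees a specified number of Pods |
| Scheduling | All nodes, or nodes matching specific conditions | Scheduled regardless of node |
| Use cases | Log collection, monitoring, network agents, etc. | General workloads such as applications and services |
| Number of Pods | Equal to the number of eligible nodes | Set explicitly by the user |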
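The "all nodes, or nodes matching specific conditions" row deserves one caveat. In the walkthrough above, `daemonset-nginx` reports DESIRED 3 on a four-node cluster and no Pod lands on `master`. The post does not show the node's taints, so the following is a sketch under the assumption that `master` carries the usual kubeadm taint `node-role.kubernetes.io/control-plane:NoSchedule`. The names `daemonset-nginx-all-nodes` and `webui-all` are hypothetical.

```yaml
# Sketch: a DaemonSet that also runs on a tainted control-plane node.
# Assumes the control-plane taint set by kubeadm; names are illustrative.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset-nginx-all-nodes
spec:
  selector:
    matchLabels:
      app: webui-all
  template:
    metadata:
      labels:
        app: webui-all
    spec:
      tolerations:
      # Tolerate the control-plane taint so the Pod may be scheduled there.
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
      containers:
      - name: nginx-container
        image: nginx:1.14
```

Under that assumption, the Pod count would match the total number of nodes instead of only the worker nodes. Running `kubectl describe node master` and checking its Taints field would confirm whether the taint is actually present.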

# A DaemonSet manifest closely resembles a Deployment manifest, so generate one with kubectl create deployment and then edit it.

$ kubectl create deployment elasticsearch -n kube-system --image registry.k8s.io/fluentd-elasticsearch:1.20 --dry-run=client -o yaml > fluentd.yaml

$ vi fluentd.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  creationTimestamp: null
  labels:
    app: elasticsearch
  name: elasticsearch
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: elasticsearch
    spec:
      containers:
      - image: registry.k8s.io/fluentd-elasticsearch:1.20
        name: fluentd-elasticsearch
        resources: {}

$ kubectl create -f fluentd.yaml

daemonset.apps/elasticsearch created

$ kubectl get ds -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

elasticsearch 1 1 1 1 1 <none> 28s

kube-proxy 1 1 1 1 1 kubernetes.io/os=linux 19m